Visual Attention and Perception

People

Welinton Yorihiko Lima Akamine

Rodrigo Pena

Guilherme Victor Ramalho Natal

Description

|

When observing a scene, the human eye typically filters the large amount of visual information available on the scene and attend to selected areas. Oculo-motor mechanisms allow the gaze of attention to either hold on a particular location (fixation) or to shift to another location when sufficient information has already been collected (saccades). The selection of fixations is based on the visual properties of the scene. Priority is given to areas with a high concentration of information, minimizing the amount of data to be processed by the brain while maximizing the quality of the collected information. Visual attention is characterized by two mechanisms known as bottom-up and top-down attention selection. |

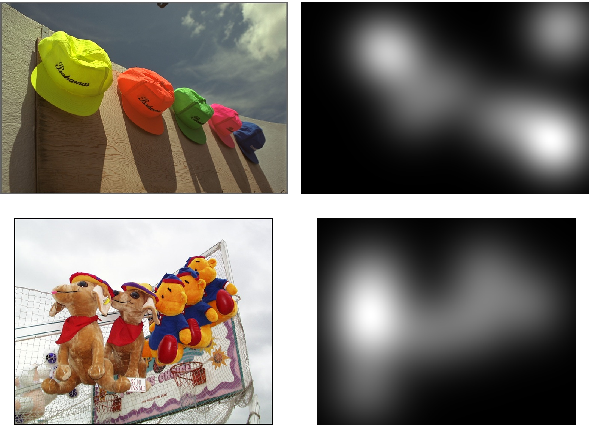

The analysis of how humans perceive scenes can be performed by tracking eye movements in subjective experiments using eye-tracker equipments. From this type of experiment, gaze patterns are collected and later post-processed to generate saliency maps (see images above). Although subjective saliency maps are considered as the ground-truth in visual attention, they cannot be used in real-time applications. To incorporate visual attention aspects into the design of video quality metrics, we have to use visual attention computational models to generate objective saliency maps.

The goal of this project is to incorporate aspects of visual attention into the design of regular video processing algorithms (such as coders, quality metrics, etc.). In particles, we are interested in understanding how attention affects quality judgements and the annoyance caused by artifacts. We aim to how use this knowledge into the design of better video quality metrics.

We investigated the benefit of incorporating objective saliency maps into image quality metrics. We compared the performance of the original quality metrics with the performance of quality metrics that incorporate saliency maps generated by different bottom-up visual attention models. Our results show that visual attention was able to improve the performance of the image quality metrics tested.

Currently, we are investigating the benefit of incorporating computational saliency maps generated into video quality metrics.

Publications

Akamine, Welington Yorihiko Lima ; Farias*, Mylène C. Q. . Video quality assessment using visual attention computational models. Journal of Electronic Imaging (Print), v. 23, p. 061107, 2014.

Akamine, Welington Yorihiko Lima ; Farias, Mylène C. Q. The Added Value of Visual Attention in Objective Video Quality Metrics. In: Eighth International Workshop on Video Processing and Quality Metrics for Consumer Electronics, 2014, Chandler, AZ. International.

Akamine, Welington Yorihiko Lima ; Farias, Mylène C. Q. . Incorporating visual attention models into video quality metrics. In: Image Quality and System Performance XI, IST/SPIE Electronic Imaging, 2014, San Francisco, v. 9016.

REDI, JUDITH ; Heynderickx, Ingrid ; MACCHIAVELLO, BRUNO ; FARIAS, MYLENE . On the impact of packet-loss impairments on visual attention mechanisms. In: 2013 IEEE International Symposium on Circuits and Systems (ISCAS), 2013, Beijing, v.1, pp. 1107.

Mylène C.Q. Farias and Welington Y.L. Akamine, On performance of image quality metrics enhanced with visual attention computational models, Electron. Letters, 24 May 2012, Vol. 48, Issue 11, p.631–633.

Welington Y.L. Akamine and Mylène C.Q. Farias, Incorporating Visual Attention Models into Image Quality Metrics, Workshop on Video Processing and Quality Metrics, Phoenix, Arizona, 2012.

References

L. Itti and C. Koch, “Computational modelling of visual attention,” Nature Reviews Neuroscience, vol. 2, 2001, p. 194–203.